Auto status change to "Under Review"

Pull request !2405

Created on Tue, 13 Feb 2024 04:51:24

- setup: change url to github

- readme: provide better descriptions

- ini: disable secure cookie by default

- setup.py: include additional package data

- README: mention getappenlight.com documentation


The requested changes are too big and the content was truncated.

```diff
@@ -9,13 +9,13 @@ notifications:
 matrix:
 include:
 - python: 3.5
-env: TOXENV=py35
+env: TOXENV=py35 ES_VERSION=6.6.2 ES_DOWNLOAD_URL=https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-oss-${ES_VERSION}.tar.gz
 - python: 3.6
-env: TOXENV=py36
+env: TOXENV=py36 ES_VERSION=6.6.2 ES_DOWNLOAD_URL=https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-oss-${ES_VERSION}.tar.gz
 addons:
 postgresql: "9.6"
 - python: 3.6
-env: TOXENV=py36 PGPORT=5432
+env: TOXENV=py36 PGPORT=5432 ES_VERSION=6.6.2 ES_DOWNLOAD_URL=https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-oss-${ES_VERSION}.tar.gz
 addons:
 postgresql: "10"
 apt:
```

```diff
@@ -24,14 +24,16 @@ matrix:
 - postgresql-client-10
 
 install:
+- wget ${ES_DOWNLOAD_URL}
+- tar -xzf elasticsearch-oss-${ES_VERSION}.tar.gz
+- ./elasticsearch-${ES_VERSION}/bin/elasticsearch &
 - travis_retry pip install -U setuptools pip tox
 
 script:
-- travis_retry tox
+- travis_retry tox -- -vv
 
 services:
 - postgresql
-- elasticsearch
 - redis
 
 before_script:
```

| @@ -1,4 +1,9 b'' | |||

|

|

1 | Visit: | |

|

|

1 | # AppEnlight | |

|

|

2 | ||

|

|

3 | Performance, exception, and uptime monitoring for the Web | |

|

|

2 | 4 | |

|

|

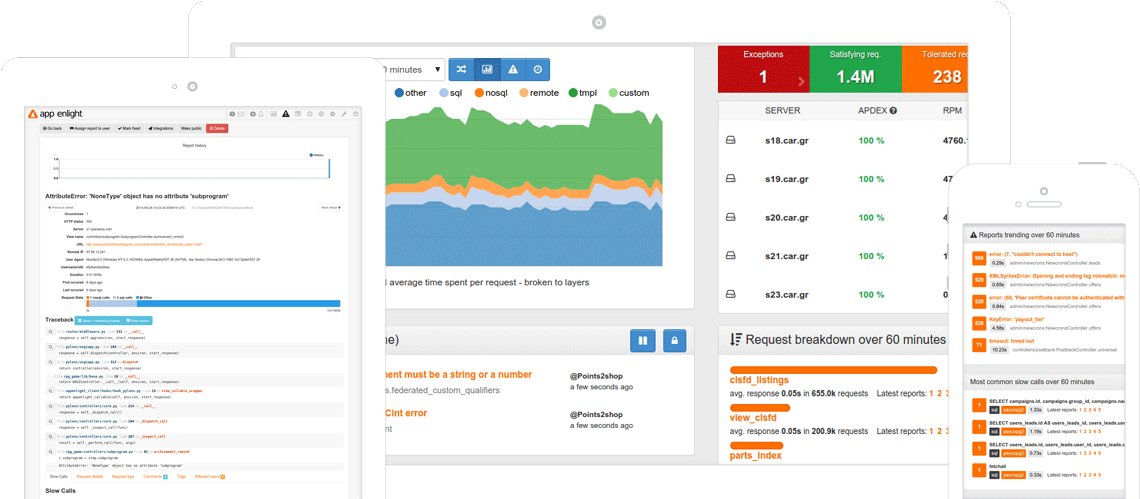

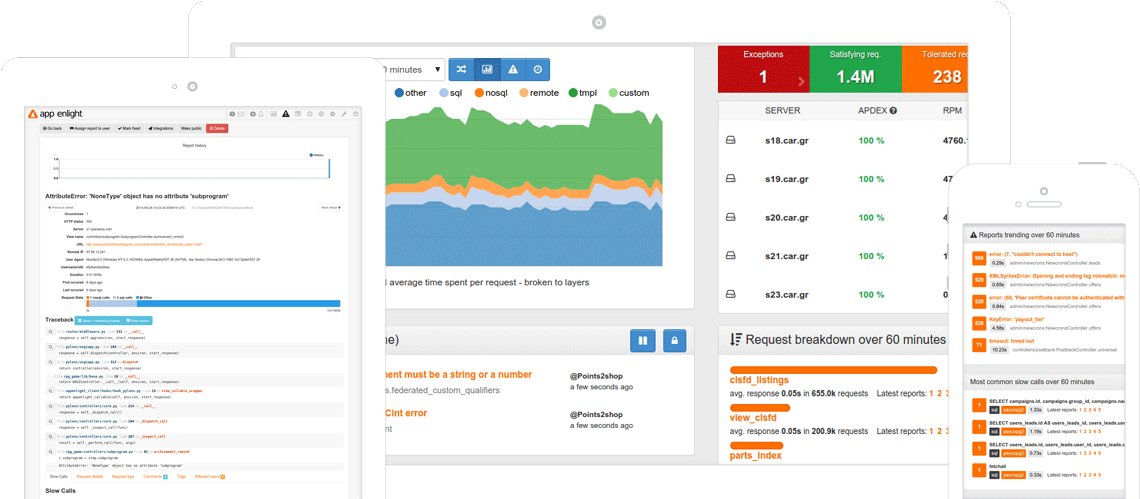

5 |  | |

|

|

6 | ||

|

|

7 | Visit: | |

|

|

3 | 8 | |

|

|

4 | 9 | [Readme moved to backend directory](backend/README.md) |

```diff
@@ -14,6 +14,13 @@ The format is based on [Keep a Changelog](https://keepachangelog.com/en/1.0.0/).
 <!-- ### Fixed -->
 
 
+## [2.0.0rc1 - 2019-04-13]
+### Changed
+* require Elasticsearch 6.x
+* move data structure to single document per index
+* got rid of bower and moved to npm in build process
+* updated angular packages to new versions
+
 ## [1.2.0 - 2019-03-17]
 ### Changed
 * Replaced elasticsearch client
```

```diff
@@ -1,2 +1,2 @@
 include *.txt *.ini *.cfg *.rst *.md VERSION
-recursive-include…
+recursive-include src *.ico *.png *.css *.gif *.jpg *.pt *.txt *.mak *.mako *.js *.html *.xml *.jinja2 *.rst *.otf *.ttf *.svg *.woff *.woff2 *.eot
```

```diff
@@ -1,20 +1,26 @@
 AppEnlight
 -----------
 
+Performance, exception, and uptime monitoring for the Web
+
+[image]
+
 Automatic Installation
 ======================
 
-Use the ansible scripts in the `automation` repository to build complete instance of application
+Use the ansible or vagrant scripts in the `automation` repository to build complete instance of application.
 You can also use `packer` files in `automation/packer` to create whole VM's for KVM and VMWare.
 
+https://github.com/AppEnlight/automation
+
 Manual Installation
 ===================
 
 To run the app you need to have meet prerequsites:
 
-- python 3.5+
-- running elasticsearch (…
-- running postgresql (9.5+ required)
+- python 3.5+ (currently 3.6 tested)
+- running elasticsearch (6.6.2 tested)
+- running postgresql (9.5+ required, tested 9.6 and 10.6)
 - running redis
 
 Install the app by performing
```

```diff
@@ -25,41 +31,42 @@ Install the app by performing
 
 Install the appenlight uptime plugin (`ae_uptime_ce` package from `appenlight-uptime-ce` repository).
 
-After installing the application you need to perform following steps:
+For production usage you can do:
 
-1. (optional) generate production.ini (or use a copy of development.ini)
+pip install appenlight
+pip install ae_uptime_ce
 
 
-appenlight-make-config production.ini
+After installing the application you need to perform following steps:
+
+1. (optional) generate production.ini (or use a copy of development.ini)
 
-2. Setup database structure:
+appenlight-make-config production.ini
 
+2. Setup database structure (replace filename with the name you picked for `appenlight-make-config`):
 
-appenlight-migratedb -c FILENAME.ini
+appenlight-migratedb -c FILENAME.ini
 
 3. To configure elasticsearch:
 
-
-appenlight-reindex-elasticsearch -t all -c FILENAME.ini
+appenlight-reindex-elasticsearch -t all -c FILENAME.ini
 
 4. Create base database objects
 
 (run this command with help flag to see how to create administrator user)
 
-
-appenlight-initializedb -c FILENAME.ini
+appenlight-initializedb -c FILENAME.ini
 
 5. Generate static assets
 
-
-appenlight-static -c FILENAME.ini
+appenlight-static -c FILENAME.ini
 
 Running application
 ===================
 
 To run the main app:
 
-pserve…
+pserve FILENAME.ini
 
 To run celery workers:
 
```

```diff
@@ -69,17 +76,23 @@ To run celery beat:
 
 celery beat -A appenlight.celery --ini FILENAME.ini
 
-To run appenlight's uptime plugin:
+To run appenlight's uptime plugin (example of uptime plugin config can be found here
+https://github.com/AppEnlight/appenlight-uptime-ce ):
 
-appenlight-uptime-monitor -c FILENAME.ini
+appenlight-uptime-monitor -c UPTIME_PLUGIN_CONFIG_FILENAME.ini
 
 Real-time Notifications
 =======================
 
 You should also run the `channelstream websocket server for real-time notifications
 
-channelstream -i filename.ini
-
+channelstream -i CHANELSTRAM_CONFIG_FILENAME.ini
+
+Additional documentation
+========================
+
+Visit https://getappenlight.com for additional server and client documentation.
+
 Testing
 =======
 
```

```diff
@@ -95,11 +108,5 @@ To develop appenlight frontend:
 
 cd frontend
 npm install
-bower install
 grunt watch
 
-
-Tagging release
-===============
-
-bumpversion --current-version 1.1.1 minor --verbose --tag --commit --dry-run
```

```diff
@@ -36,7 +36,7 @@ pygments==2.3.1
 lxml==4.3.2
 paginate==0.5.6
 paginate-sqlalchemy==0.3.0
-elasticsearch>=…
+elasticsearch>=6.0.0,<7.0.0
 mock==1.0.1
 itsdangerous==1.1.0
 camplight==0.9.6
```

```diff
@@ -16,7 +16,10 @@ def parse_req(req):
 return compiled.search(req).group(1).strip()
 
 
-requires = [_f for _f in map(parse_req, REQUIREMENTS) if _f]
+if "APPENLIGHT_DEVELOP" in os.environ:
+requires = [_f for _f in map(parse_req, REQUIREMENTS) if _f]
+else:
+requires = REQUIREMENTS
 
 
 def _get_meta_var(name, data, callback_handler=None):
```
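The `setup.py` hunk now runs `parse_req` over `REQUIREMENTS` only when `APPENLIGHT_DEVELOP` is set, and otherwise passes `REQUIREMENTS` through unchanged. A minimal sketch of that branch follows. The regex inside the real `parse_req` is not visible in this diff, so the pattern below (and the assumption that it reduces a requirement line to a bare package name) is hypothetical, as is the helper name `select_requirements`.

```python
import os
import re

# Hypothetical pattern: the regex compiled in setup.py is not shown in this diff.
_REQ_NAME = re.compile(r"^\s*([A-Za-z0-9_.\-]+)")


def parse_req(req):
    """Return the bare distribution name from a requirement line, or None."""
    match = _REQ_NAME.search(req)
    return match.group(1).strip() if match else None


def select_requirements(requirements, environ=os.environ):
    """Mirror the new setup.py branch: names only in develop mode, else unchanged."""
    if "APPENLIGHT_DEVELOP" in environ:
        # Drop version pins (and lines that yield no name) for development installs.
        return [name for name in map(parse_req, requirements) if name]
    # Regular installs keep the requirement lines exactly as listed.
    return requirements
```

Passing `environ` explicitly keeps the branch testable without touching the real process environment.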

```diff
@@ -33,30 +36,37 @@ def _get_meta_var(name, data, callback_handler=None):
 with open(os.path.join(here, "src", "appenlight", "__init__.py"), "r") as _meta:
 _metadata = _meta.read()
 
-with open(os.path.join(here, "VERSION"), "r") as _meta_version:
-__version__ = _meta_version.read().strip()
-
 __license__ = _get_meta_var("__license__", _metadata)
 __author__ = _get_meta_var("__author__", _metadata)
 __url__ = _get_meta_var("__url__", _metadata)
 
 found_packages = find_packages("src")
+found_packages.append("appenlight.migrations")
 found_packages.append("appenlight.migrations.versions")
 setup(
 name="appenlight",
 description="appenlight",
-long_description=README…
+long_description=README,
 classifiers=[
+"Framework :: Pyramid",
+"License :: OSI Approved :: Apache Software License",
 "Programming Language :: Python",
-"Framework :: Pylons",
+"Programming Language :: Python :: 3 :: Only",
+"Programming Language :: Python :: 3.6",
+"Topic :: System :: Monitoring",
+"Topic :: Software Development",
+"Topic :: Software Development :: Bug Tracking",
+"Topic :: Internet :: Log Analysis",
 "Topic :: Internet :: WWW/HTTP",
 "Topic :: Internet :: WWW/HTTP :: WSGI :: Application",
 ],
-version=__version__,
+version="2.0.0rc1",
 license=__license__,
 author=__author__,
-url=__url__,
-keywords="web wsgi bfg pylons pyramid",
+url="https://github.com/AppEnlight/appenlight",
+keywords="web wsgi bfg pylons pyramid flask django monitoring apm instrumentation appenlight",
+python_requires=">=3.5",
+long_description_content_type="text/markdown",
 package_dir={"": "src"},
 packages=found_packages,
 include_package_data=True,
```

```diff
@@ -239,7 +239,7 @@ def add_reports(resource_id, request_params, dataset, **kwargs):
 @celery.task(queue="es", default_retry_delay=600, max_retries=144)
 def add_reports_es(report_group_docs, report_docs):
 for k, v in report_group_docs.items():
-to_update = {"_index": k, "_type": "report…
+to_update = {"_index": k, "_type": "report"}
 [i.update(to_update) for i in v]
 elasticsearch.helpers.bulk(Datastores.es, v)
 for k, v in report_docs.items():
```
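`add_reports_es` stamps every document with `_index` and `_type` before handing the list to `elasticsearch.helpers.bulk`, which reads those keys as per-action metadata and sends the remaining keys as the document body. The PR sets `_type` to `"report"` for report groups too, in line with the changelog's move to a single document type per index (Elasticsearch 6 allows only one mapping type per index). A sketch of the same stamping step that needs no Elasticsearch connection; `tag_bulk_actions` and the index name in the test are hypothetical.

```python
def tag_bulk_actions(docs_by_index, doc_type="report"):
    """Stamp each document with the bulk metadata keys set in add_reports_es.

    docs_by_index maps an index name to a list of document dicts. The dicts are
    updated in place, as in the task, and also returned as one flat list.
    """
    actions = []
    for index_name, docs in docs_by_index.items():
        for doc in docs:
            # A plain loop instead of a list comprehension used only for side effects.
            doc.update({"_index": index_name, "_type": doc_type})
            actions.append(doc)
    return actions
```

The returned list could then be passed as `elasticsearch.helpers.bulk(Datastores.es, actions)`; the original task instead calls `bulk` once per index.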

```diff
@@ -259,7 +259,7 @@ def add_reports_slow_calls_es(es_docs):
 @celery.task(queue="es", default_retry_delay=600, max_retries=144)
 def add_reports_stats_rows_es(es_docs):
 for k, v in es_docs.items():
-to_update = {"_index": k, "_type": "…
+to_update = {"_index": k, "_type": "report"}
 [i.update(to_update) for i in v]
 elasticsearch.helpers.bulk(Datastores.es, v)
 
```

```diff
@@ -287,7 +287,7 @@ def add_logs(resource_id, request_params, dataset, **kwargs):
 if entry["primary_key"] is None:
 es_docs[log_entry.partition_id].append(log_entry.es_doc())
 
-# 2nd pass to delete all log entries from db fo…
+# 2nd pass to delete all log entries from db for same pk/ns pair
 if ns_pairs:
 ids_to_delete = []
 es_docs = collections.defaultdict(list)
```

```diff
@@ -325,10 +325,11 @@ def add_logs(resource_id, request_params, dataset, **kwargs):
 query = {"query": {"terms": {"delete_hash": batch}}}
 
 try:
-Datastores.es.…
-…
-…
+Datastores.es.delete_by_query(
+index=es_index,
+doc_type="log",
 body=query,
+conflicts="proceed",
 )
 except elasticsearch.exceptions.NotFoundError as exc:
 msg = "skipping index {}".format(es_index)
```
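In `add_logs`, the old raw transport call against the `/{index}/{type}/_query` endpoint, which Elasticsearch 6 no longer provides, becomes `delete_by_query(..., conflicts="proceed")`; with `conflicts="proceed"` the request counts version conflicts instead of aborting on the first one. The sketch below builds the keyword arguments for one such call per batch of `delete_hash` values. The batch size and both helper names are assumptions; the code that produces `batch` in the real task is outside this hunk.

```python
from itertools import islice


def batched(iterable, size):
    """Yield successive lists of at most `size` items."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def delete_by_hash_requests(es_index, delete_hashes, batch_size=500):
    """Build keyword arguments for Elasticsearch.delete_by_query, one dict per batch."""
    for batch in batched(delete_hashes, batch_size):
        yield {
            "index": es_index,
            "doc_type": "log",
            "body": {"query": {"terms": {"delete_hash": batch}}},
            # Count version conflicts instead of failing the whole request.
            "conflicts": "proceed",
        }
```

Each yielded dict could then be used as `Datastores.es.delete_by_query(**kwargs)`, still wrapped in the `NotFoundError` handling shown above.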

```diff
@@ -689,11 +690,7 @@ def alerting_reports():
 def logs_cleanup(resource_id, filter_settings):
 request = get_current_request()
 request.tm.begin()
-es_query = {
-"query": {
-"bool": {"filter": [{"term": {"resource_id": resource_id}}]}
-}
-}
+es_query = {"query": {"bool": {"filter": [{"term": {"resource_id": resource_id}}]}}}
 
 query = DBSession.query(Log).filter(Log.resource_id == resource_id)
 if filter_settings["namespace"]:
```

```diff
@@ -703,6 +700,6 @@ def logs_cleanup(resource_id, filter_settings):
 )
 query.delete(synchronize_session=False)
 request.tm.commit()
-Datastores.es.…
-"DELETE", "/{}/{}/_query".format("rcae_l_*", "log"), body=es_query
+Datastores.es.delete_by_query(
+index="rcae_l_*", doc_type="log", body=es_query, conflicts="proceed"
 )
```

```diff
@@ -208,7 +208,7 @@ def es_index_name_limiter(
 elif t == "metrics":
 es_index_types.append("rcae_m_%s")
 elif t == "uptime":
-es_index_types.append("rcae_u_%s")
+es_index_types.append("rcae_uptime_ce_%s")
 elif t == "slow_calls":
 es_index_types.append("rcae_sc_%s")
 
```
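`es_index_name_limiter` maps each requested data type to an index-name template, and this PR renames the uptime template from `rcae_u_%s` to `rcae_uptime_ce_%s` (presumably to match the indices written by the `ae_uptime_ce` plugin). The lookup can be sketched as a table. Only the three templates visible in this hunk are included, and the value substituted for `%s` is not shown here, so the suffixes in the test are made up.

```python
# Index-name templates visible in this hunk; other data types are omitted.
ES_INDEX_TEMPLATES = {
    "metrics": "rcae_m_%s",
    "uptime": "rcae_uptime_ce_%s",
    "slow_calls": "rcae_sc_%s",
}


def index_names_for(types, suffixes):
    """Expand each known type's template with every suffix; unknown types are skipped."""
    names = []
    for index_type in types:
        template = ES_INDEX_TEMPLATES.get(index_type)
        if template is None:
            continue
        names.extend(template % suffix for suffix in suffixes)
    return names
```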

```diff
@@ -552,7 +552,9 @@ def get_es_info(cache_regions, es_conn):
 @cache_regions.memory_min_10.cache_on_arguments()
 def get_es_info_cached():
 returned_info = {"raw_info": es_conn.info()}
-returned_info["version"] = returned_info["raw_info"]["version"]["number"].split(…
+returned_info["version"] = returned_info["raw_info"]["version"]["number"].split(
+"."
+)
 return returned_info
 
 return get_es_info_cached()
```
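`get_es_info` caches `es_conn.info()` and splits `version.number` on dots. Because the changelog in this PR requires Elasticsearch 6.x, a caller could turn that split into a major-version check. The sketch below does so on a plain dict shaped like the `info()` response (only `version.number` is relied on); the check itself and both function names are illustrative and not part of the PR.

```python
def parse_es_version(raw_info):
    """Split info()['version']['number'] into integers, e.g. '6.6.2' -> (6, 6, 2)."""
    number = raw_info["version"]["number"]
    # Drop a pre-release suffix such as '-beta1' before converting to ints.
    core = number.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def require_es_major(raw_info, major=6):
    """Return the parsed version, or raise RuntimeError on an unexpected major version."""
    version = parse_es_version(raw_info)
    if version[0] != major:
        raise RuntimeError(
            "Elasticsearch {}.x required, found {}".format(
                major, raw_info["version"]["number"]
            )
        )
    return version
```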

```diff
@@ -112,7 +112,7 @@ class Log(Base, BaseModel):
 else None,
 }
 return {
-"…
+"log_id": str(self.log_id),
 "delete_hash": self.delete_hash,
 "resource_id": self.resource_id,
 "request_id": self.request_id,
```

```diff
@@ -60,6 +60,7 @@ class Metric(Base, BaseModel):
 }
 
 return {
+"metric_id": self.pkey,
 "resource_id": self.resource_id,
 "timestamp": self.timestamp,
 "namespace": self.namespace,
```

```diff
@@ -181,7 +181,7 @@ class Report(Base, BaseModel):
 request_data = data.get("request", {})
 
 self.request = request_data
-self.request_stats = data.get("request_stats"…
+self.request_stats = data.get("request_stats") or {}
 traceback = data.get("traceback")
 if not traceback:
 traceback = data.get("frameinfo")
```

```diff
@@ -314,7 +314,7 @@ class Report(Base, BaseModel):
 "bool": {
 "filter": [
 {"term": {"group_id": self.group_id}},
-{"range": {"…
+{"range": {"report_id": {"lt": self.id}}},
 ]
 }
 },
```

```diff
@@ -324,7 +324,7 @@ class Report(Base, BaseModel):
 body=query, index=self.partition_id, doc_type="report"
 )
 if result["hits"]["total"]:
-return result["hits"]["hits"][0]["_source"]["…
+return result["hits"]["hits"][0]["_source"]["report_id"]
 
 def get_next_in_group(self, request):
 query = {
```

```diff
@@ -333,7 +333,7 @@ class Report(Base, BaseModel):
 "bool": {
 "filter": [
 {"term": {"group_id": self.group_id}},
-{"range": {"…
+{"range": {"report_id": {"gt": self.id}}},
 ]
 }
 },
```
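The two neighbour lookups in `Report` now filter on the `report_id` field that `Report.es_doc` writes, using `lt` for the previous report and `gt` for the next one within the same `group_id`. The filter part can be sketched as follows. Sorting and result size are outside the visible lines, so they are deliberately left out, and `neighbour_filter` is a hypothetical name.

```python
def neighbour_filter(group_id, report_id, direction):
    """Bool filter for reports before ("previous") or after ("next") report_id in a group."""
    ops = {"previous": "lt", "next": "gt"}
    if direction not in ops:
        raise ValueError("direction must be 'previous' or 'next'")
    return {
        "bool": {
            "filter": [
                {"term": {"group_id": group_id}},
                {"range": {"report_id": {ops[direction]: report_id}}},
            ]
        }
    }
```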

```diff
@@ -343,7 +343,7 @@ class Report(Base, BaseModel):
 body=query, index=self.partition_id, doc_type="report"
 )
 if result["hits"]["total"]:
-return result["hits"]["hits"][0]["_source"]["…
+return result["hits"]["hits"][0]["_source"]["report_id"]
 
 def get_public_url(self, request=None, report_group=None, _app_url=None):
 """
```

```diff
@@ -469,7 +469,7 @@ class Report(Base, BaseModel):
 tags["user_name"] = {"value": [self.username], "numeric_value": None}
 return {
 "_id": str(self.id),
-"…
+"report_id": str(self.id),
 "resource_id": self.resource_id,
 "http_status": self.http_status or "",
 "start_time": self.start_time,
```

```diff
@@ -482,9 +482,11 @@ class Report(Base, BaseModel):
 "request_id": self.request_id,
 "ip": self.ip,
 "group_id": str(self.group_id),
-"_parent": str(self.group_id),
+"type": "report",
+"join_field": {"name": "report", "parent": str(self.group_id)},
 "tags": tags,
 "tag_list": tag_list,
+"_routing": str(self.group_id),
 }
 
 @property
```
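Elasticsearch 6 allows one mapping type per index, so the `_parent` meta-field gives way to a `join` field: `Report.es_doc` now emits `join_field` with the parent group id, `ReportGroup.es_doc` marks itself as `report_group`, and each report carries `_routing` so it is indexed on its parent's shard, which the join field requires. A sketch of both document shapes follows. The mapping fragment is an assumption (the PR's index mapping is not in this diff) and the helper names are hypothetical.

```python
# Assumed mapping fragment: the PR's actual index mapping is not shown in this diff.
JOIN_MAPPING = {
    "join_field": {"type": "join", "relations": {"report_group": "report"}}
}


def report_group_doc(group_id, **fields):
    """Parent document: declares itself as the 'report_group' side of the join."""
    doc = {"_id": str(group_id), "group_id": str(group_id), "type": "report_group"}
    doc.update(fields)
    doc["join_field"] = {"name": "report_group"}
    return doc


def report_doc(report_id, group_id, **fields):
    """Child document: names its parent group and is routed to the parent's shard."""
    doc = {"_id": str(report_id), "report_id": str(report_id), "type": "report"}
    doc.update(fields)
    doc["join_field"] = {"name": "report", "parent": str(group_id)}
    # elasticsearch.helpers.bulk treats _routing as request metadata, not source.
    doc["_routing"] = str(group_id)
    return doc
```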

```diff
@@ -518,9 +520,12 @@ def after_update(mapper, connection, target):
 
 def after_delete(mapper, connection, target):
 if not hasattr(target, "_skip_ft_index"):
-query = {"query": {"term": {"…
-Datastores.es.…
-"DELETE", "/{}/{}/_query".format(target.partition_id, "report"), body=query
+query = {"query": {"term": {"report_id": target.id}}}
+Datastores.es.delete_by_query(
+index=target.partition_id,
+doc_type="report",
+body=query,
+conflicts="proceed",
 )
 
 
```

```diff
@@ -178,7 +178,7 @@ class ReportGroup(Base, BaseModel):
 def es_doc(self):
 return {
 "_id": str(self.id),
-"p…
+"group_id": str(self.id),
 "resource_id": self.resource_id,
 "error": self.error,
 "fixed": self.fixed,
```

```diff
@@ -190,6 +190,8 @@ class ReportGroup(Base, BaseModel):
 "summed_duration": self.summed_duration,
 "first_timestamp": self.first_timestamp,
 "last_timestamp": self.last_timestamp,
+"type": "report_group",
+"join_field": {"name": "report_group"},
 }
 
 def set_notification_info(self, notify_10=False, notify_100=False):
```

```diff
@@ -258,27 +260,21 @@ def after_insert(mapper, connection, target):
 if not hasattr(target, "_skip_ft_index"):
 data = target.es_doc()
 data.pop("_id", None)
-Datastores.es.index(target.partition_id, "report…
+Datastores.es.index(target.partition_id, "report", data, id=target.id)
 
 
 def after_update(mapper, connection, target):
 if not hasattr(target, "_skip_ft_index"):
 data = target.es_doc()
 data.pop("_id", None)
-Datastores.es.index(target.partition_id, "report…
+Datastores.es.index(target.partition_id, "report", data, id=target.id)
 
 
 def after_delete(mapper, connection, target):
 query = {"query": {"term": {"group_id": target.id}}}
 # delete by query
-Datastores.es.…
-"DELETE", "/{}/{}/_query".format(target.partition_id, "report"), body=query
-)
-query = {"query": {"term": {"pg_id": target.id}}}
-Datastores.es.transport.perform_request(
-"DELETE",
-"/{}/{}/_query".format(target.partition_id, "report_group"),
-body=query,
+Datastores.es.delete_by_query(
+index=target.partition_id, doc_type="report", body=query, conflicts="proceed"
 )
 
 
```

```diff
@@ -48,12 +48,13 @@ class ReportStat(Base, BaseModel):
 return {
 "resource_id": self.resource_id,
 "timestamp": self.start_interval,
-"…
+"report_stat_id": str(self.id),
 "permanent": True,
 "request_id": None,
 "log_level": "ERROR",
 "message": None,
 "namespace": "appenlight.error",
+"group_id": str(self.group_id),
 "tags": {
 "duration": {"values": self.duration, "numeric_values": self.duration},
 "occurences": {
```

```diff
@@ -76,4 +77,5 @@ class ReportStat(Base, BaseModel):
 "server_name",
 "view_name",
 ],
+"type": "report_stat",
 }
```

```diff
@@ -56,11 +56,7 @@ class LogService(BaseService):
 filter_settings = {}
 
 query = {
-"query": {
-"bool": {
-"filter": [{"terms": {"resource_id": list(app_ids)}}]
-}
-}
+"query": {"bool": {"filter": [{"terms": {"resource_id": list(app_ids)}}]}}
 }
 
 start_date = filter_settings.get("start_date")
```

```diff
@@ -132,13 +128,13 @@ class LogService(BaseService):
 
 @classmethod
 def get_search_iterator(
-…
-…
-…
-…
-…
-…
-…
+cls,
+app_ids=None,
+page=1,
+items_per_page=50,
+order_by=None,
+filter_settings=None,
+limit=None,
 ):
 if not app_ids:
 return {}, 0
```

```diff
@@ -171,15 +167,15 @@ class LogService(BaseService):
 
 @classmethod
 def get_paginator_by_app_ids(
-…
-…
-…
-…
-…
-…
-…
-…
-…
+cls,
+app_ids=None,
+page=1,
+item_count=None,
+items_per_page=50,
+order_by=None,
+filter_settings=None,
+exclude_columns=None,
+db_session=None,
 ):
 if not filter_settings:
 filter_settings = {}
```

```diff
@@ -190,7 +186,7 @@ class LogService(BaseService):
 [], item_count=item_count, items_per_page=items_per_page, **filter_settings
 )
 ordered_ids = tuple(
-item["_source"]["…
+item["_source"]["log_id"] for item in results.get("hits", [])
 )
 
 sorted_instance_list = []
```

```diff
@@ -64,23 +64,21 @@ class ReportGroupService(BaseService):
 "groups": {
 "aggs": {
 "sub_agg": {
-"value_count": {…
+"value_count": {
+"field": "tags.group_id.values.keyword"
+}
 }
 },
 "filter": {"exists": {"field": "tags.group_id.values"}},
 }
 },
-"terms": {"field": "tags.group_id.values", "size": limit},
+"terms": {"field": "tags.group_id.values.keyword", "size": limit},
 }
 },
 "query": {
 "bool": {
 "filter": [
-{
-"terms": {
-"resource_id": [filter_settings["resource"][0]]
-}
-},
+{"terms": {"resource_id": [filter_settings["resource"][0]]}},
 {
 "range": {
 "timestamp": {
```
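Under Elasticsearch 6 dynamic mapping, a string value is indexed as `text` with a `keyword` sub-field, and `terms` or `value_count` aggregations cannot use a `text` field by default because fielddata is disabled. That is why the aggregations in this hunk move to `tags.group_id.values.keyword` while the `exists` filter keeps the base field. A sketch that rebuilds the aggregation shape from this hunk; `keyword` and `parent_group_agg` are hypothetical helpers.

```python
def keyword(field):
    """Return the keyword sub-field that dynamic mapping creates for a string field."""
    return field if field.endswith(".keyword") else field + ".keyword"


def parent_group_agg(limit):
    """Terms buckets over group ids with a filtered value_count, as in this hunk."""
    base = "tags.group_id.values"
    return {
        "aggs": {
            "groups": {
                # Aggregations read doc values, so they target the keyword sub-field.
                "aggs": {"sub_agg": {"value_count": {"field": keyword(base)}}},
                # exists only checks presence, so the base field still works here.
                "filter": {"exists": {"field": base}},
            }
        },
        "terms": {"field": keyword(base), "size": limit},
    }
```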

```diff
@@ -97,7 +95,7 @@ class ReportGroupService(BaseService):
 es_query["query"]["bool"]["filter"].extend(tags)
 
 result = Datastores.es.search(
-body=es_query, index=index_names, doc_type="…
+body=es_query, index=index_names, doc_type="report", size=0
 )
 series = []
 for bucket in result["aggregations"]["parent_agg"]["buckets"]:
```

```diff
@@ -136,14 +134,14 @@ class ReportGroupService(BaseService):
 "bool": {
 "must": [],
 "should": [],
-"filter": [{"terms": {"resource_id": list(app_ids)}}]
+"filter": [{"terms": {"resource_id": list(app_ids)}}],
 }
 },
 "aggs": {
 "top_groups": {
 "terms": {
 "size": 5000,
-"field": "…
+"field": "join_field#report_group",
 "order": {"newest": "desc"},
 },
 "aggs": {
```
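With the join field in place, Elasticsearch exposes each document's parent id under `join_field#report_group`, and the `top_groups` terms aggregation now buckets on that field. A sketch of the aggregation skeleton follows. The `newest` sub-aggregation referenced by `order` is defined outside the visible lines, so its `max` over `start_time` is an assumption, as is the helper name.

```python
def top_groups_agg(size=5000):
    """Bucket report documents by their parent report group via the join field."""
    return {
        "top_groups": {
            "terms": {
                "size": size,
                # "<join field>#<parent name>" holds the parent id for each document.
                "field": "join_field#report_group",
                "order": {"newest": "desc"},
            },
            "aggs": {
                # Assumption: "newest" is a max over a timestamp; the real
                # sub-aggregation is outside the lines shown in this hunk.
                "newest": {"max": {"field": "start_time"}},
            },
        }
    }
```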

```diff
@@ -315,7 +313,9 @@ class ReportGroupService(BaseService):
 ordered_ids = []
 if results:
 for item in results["top_groups"]["buckets"]:
-pg_id = item["top_reports_hits"]["hits"]["hits"][0]["_source"][…
+pg_id = item["top_reports_hits"]["hits"]["hits"][0]["_source"][
+"report_id"
+]
 ordered_ids.append(pg_id)
 log.info(filter_settings)
 paginator = paginate.Page(
```
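Each `top_groups` bucket carries a `top_reports_hits` sub-aggregation (a `top_hits`, judging by the `hits.hits` path), and the paginator now reads `report_id` from the first hit's `_source`. The sketch below walks a hand-written response fragment. The sample data is invented, and the empty-hits guard is an addition not present in the diffed code.

```python
def ordered_report_ids(aggregations):
    """Collect report_id from the first top hit of each top_groups bucket, in bucket order."""
    ordered_ids = []
    if not aggregations:
        return ordered_ids
    for bucket in aggregations["top_groups"]["buckets"]:
        hits = bucket["top_reports_hits"]["hits"]["hits"]
        if hits:  # guard added in this sketch; the diffed code indexes [0] directly
            ordered_ids.append(hits[0]["_source"]["report_id"])
    return ordered_ids


# Invented response fragment shaped like the path used in the diff.
SAMPLE = {
    "top_groups": {
        "buckets": [
            {"key": "3", "top_reports_hits": {"hits": {"hits": [{"_source": {"report_id": "42"}}]}}},
            {"key": "5", "top_reports_hits": {"hits": {"hits": [{"_source": {"report_id": "17"}}]}}},
        ]
    }
}
```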

```diff
@@ -445,10 +445,16 @@ class ReportGroupService(BaseService):
 "aggs": {
 "types": {
 "aggs": {
-"sub_agg": {…
+"sub_agg": {
+"terms": {"field": "tags.type.values.keyword"}
+}
 },
 "filter": {
-"…
+"bool": {
+"filter": [
+{"exists": {"field": "tags.type.values"}}
+]
+}
 },
 }
 },
```

```diff
@@ -466,11 +472,7 @@ class ReportGroupService(BaseService):
 "query": {
 "bool": {
 "filter": [
-{
-"terms": {
-"resource_id": [filter_settings["resource"][0]]
-}
-},
+{"terms": {"resource_id": [filter_settings["resource"][0]]}},
 {
 "range": {
 "timestamp": {
```

```diff
@@ -485,7 +487,7 @@ class ReportGroupService(BaseService):
 }
 if group_id:
 parent_agg = es_query["aggs"]["parent_agg"]
-filters = parent_agg["aggs"]["types"]["filter"]["…
+filters = parent_agg["aggs"]["types"]["filter"]["bool"]["filter"]
 filters.append({"terms": {"tags.group_id.values": [group_id]}})
 
 index_names = es_index_name_limiter(
```

```diff
@@ -31,13 +31,17 @@ class ReportStatService(BaseService):
 "aggs": {
 "reports": {
 "aggs": {
-"sub_agg": {"value_count": {"field": "tags.group_id.values"}}
+"sub_agg": {
+"value_count": {"field": "tags.group_id.values.keyword"}
+}
 },
 "filter": {
-"…
-…
-{"…
-]
+"bool": {
+"filter": [
+{"terms": {"resource_id": [resource_id]}},
+{"exists": {"field": "tags.group_id.values"}},
+]
+}
 },
 }
 },
```

```diff
@@ -142,11 +142,7 @@ class RequestMetricService(BaseService):
 "query": {
 "bool": {
 "filter": [
-{
-"terms": {
-"resource_id": [filter_settings["resource"][0]]
-}
-},
+{"terms": {"resource_id": [filter_settings["resource"][0]]}},
 {
 "range": {
 "timestamp": {
```

| @@ -235,6 +231,8 b' class RequestMetricService(BaseService):' | |||

|

|

235 | 231 | script_text = "doc['tags.main.numeric_values'].value / {}".format( |

|

|

236 | 232 | total_time_spent |

|

|

237 | 233 | ) |

|

|

234 | if total_time_spent == 0: | |

|

|

235 | script_text = "0" | |

|

|

238 | 236 | |

|

|

239 | 237 | if index_names and filter_settings["resource"]: |

|

|

240 | 238 | es_query = { |
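The new `if total_time_spent == 0` branch keeps the percentage script from dividing by zero when the time window has no recorded time. Below is the same guard as a standalone function; the name `build_percentage_script` is hypothetical. It returns the script source string that later feeds the `sum` aggregation:

```python
def build_percentage_script(total_time_spent):
    """Return the script source expressing each doc's share of total time."""
    if total_time_spent == 0:
        # No recorded time: every share is zero, so skip the division entirely.
        return "0"
    return "doc['tags.main.numeric_values'].value / {}".format(total_time_spent)


print(build_percentage_script(0))
print(build_percentage_script(12.5))
```

The next hunk also drops `"lang": "expression"`. The string therefore runs under the cluster's default script language, which is Painless on Elasticsearch 6.x.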

| @@ -252,14 +250,7 b' class RequestMetricService(BaseService):' | |||

|

|

252 | 250 | }, |

|

|

253 | 251 | }, |

|

|

254 | 252 | "percentage": { |

|

|

255 | "aggs": { | |

|

|

256 | "sub_agg": { | |

|

|

257 | "sum": { | |

|

|

258 | "lang": "expression", | |

|

|

259 | "script": script_text, | |

|

|

260 | } | |

|

|

261 | } | |

|

|

262 | }, | |

|

|

253 | "aggs": {"sub_agg": {"sum": {"script": script_text}}}, | |

|

|

263 | 254 | "filter": { |

|

|

264 | 255 | "exists": {"field": "tags.main.numeric_values"} |

|

|

265 | 256 | }, |

| @@ -276,7 +267,7 b' class RequestMetricService(BaseService):' | |||

|

|

276 | 267 | }, |

|

|

277 | 268 | }, |

|

|

278 | 269 | "terms": { |

|

|

279 | "field": "tags.view_name.values", | |

|

|

270 | "field": "tags.view_name.values.keyword", | |

|

|

280 | 271 | "order": {"percentage>sub_agg": "desc"}, |

|

|

281 | 272 | "size": 15, |

|

|

282 | 273 | }, |

| @@ -317,7 +308,10 b' class RequestMetricService(BaseService):' | |||

|

|

317 | 308 | query = { |

|

|

318 | 309 | "aggs": { |

|

|

319 | 310 | "top_reports": { |

|

|

320 | "terms": {"field": "tags.view_name.values", "size": len(series)}, | |

|

|

311 | "terms": { | |

|

|

312 | "field": "tags.view_name.values.keyword", | |

|

|

313 | "size": len(series), | |

|

|

314 | }, | |

|

|

321 | 315 | "aggs": { |

|

|

322 | 316 | "top_calls_hits": { |

|

|

323 | 317 | "top_hits": {"sort": {"start_time": "desc"}, "size": 5} |

| @@ -339,7 +333,7 b' class RequestMetricService(BaseService):' | |||

|

|

339 | 333 | for hit in bucket["top_calls_hits"]["hits"]["hits"]: |

|

|

340 | 334 | details[bucket["key"]].append( |

|

|

341 | 335 | { |

|

|

342 | "report_id": hit["_source"]["

|

|

|

336 | "report_id": hit["_source"]["report_id"], | |

|

|

343 | 337 | "group_id": hit["_source"]["group_id"], |

|

|

344 | 338 | } |

|

|

345 | 339 | ) |
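The loop in this hunk walks each terms bucket and collects `report_id` and `group_id` from the `_source` of its top hits. The sketch below runs that bucket-walking step against a hand-written response. The `aggregations` wrapper and the sample keys are assumptions about the response shape, and `collect_top_call_details` is a hypothetical name:

```python
from collections import defaultdict


def collect_top_call_details(response):
    """Group the latest hits of each terms bucket under the bucket key."""
    details = defaultdict(list)
    for bucket in response["aggregations"]["top_reports"]["buckets"]:
        for hit in bucket["top_calls_hits"]["hits"]["hits"]:
            details[bucket["key"]].append(
                {
                    "report_id": hit["_source"]["report_id"],
                    "group_id": hit["_source"]["group_id"],
                }
            )
    return dict(details)


# Hypothetical response trimmed to the fields the loop reads.
sample = {
    "aggregations": {
        "top_reports": {
            "buckets": [
                {
                    "key": "app.views.index",
                    "top_calls_hits": {
                        "hits": {
                            "hits": [
                                {"_source": {"report_id": "r1", "group_id": "g1"}},
                                {"_source": {"report_id": "r2", "group_id": "g1"}},
                            ]
                        }
                    },
                }
            ]
        }
    }
}

print(collect_top_call_details(sample))
```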

| @@ -390,18 +384,22 b' class RequestMetricService(BaseService):' | |||

|

|

390 | 384 | } |

|

|

391 | 385 | }, |

|

|

392 | 386 | "filter": { |

|

|

393 | "

|

|

|

394 |

|

|

|

|

395 |

|

|

|

|

396 | "

|

|

|

397 |

|

|

|

|

398 |

|

|

|

|

399 |

|

|

|

|

400 |

|

|

|

|

401 |

|

|

|

|

402 |

|

|

|

|

403 |

|

|

|

|

404 | ] | |

|

|

387 | "bool": { | |

|

|

388 | "filter": [ | |

|

|

389 | { | |

|

|

390 | "range": { | |

|

|

391 | "tags.main.numeric_values": { | |

|

|

392 | "gte": "4" | |

|

|

393 | } | |

|

|

394 | } | |

|

|

395 | }, | |

|

|

396 | { | |

|

|

397 | "exists": { | |

|

|

398 | "field": "tags.requests.numeric_values" | |

|

|

399 | } | |

|

|

400 | }, | |

|

|

401 | ] | |

|

|

402 | } | |

|

|

405 | 403 | }, |

|

|

406 | 404 | }, |

|

|

407 | 405 | "main": { |

| @@ -431,27 +429,36 b' class RequestMetricService(BaseService):' | |||

|

|

431 | 429 | } |

|

|

432 | 430 | }, |

|

|

433 | 431 | "filter": { |

|

|

434 | "

|

|

|

435 |

|

|

|

|

436 |

|

|

|

|

437 | "

|

|

|

438 |

|

|

|

|

439 |

|

|

|

|

440 |

|

|

|

|

441 |

|

|

|

|

442 |

|

|

|

|

443 |

|

|

|

|

444 |

|

|

|

|

445 | { | |

|

|

446 | "

|

|

|

447 |

|

|

|

|

448 | } | |

|

|

449 | }, | |

|

|

450 |

|

|

|

|

432 | "bool": { | |

|

|

433 | "filter": [ | |

|

|

434 | { | |

|

|

435 | "range": { | |

|

|

436 | "tags.main.numeric_values": { | |

|

|

437 | "gte": "1" | |

|

|

438 | } | |

|

|

439 | } | |

|

|

440 | }, | |

|

|

441 | { | |

|

|

442 | "range": { | |

|

|

443 | "tags.main.numeric_values": { | |

|

|

444 | "lt": "4" | |

|

|

445 | } | |

|

|

446 | } | |

|

|

447 | }, | |

|

|

448 | { | |

|

|

449 | "exists": { | |

|

|

450 | "field": "tags.requests.numeric_values" | |

|

|

451 | } | |

|

|

452 | }, | |

|

|

453 | ] | |

|

|

454 | } | |

|

|

451 | 455 | }, |

|

|

452 | 456 | }, |

|

|

453 | 457 | }, |

|

|

454 | "terms": {"field": "tags.server_name.values", "size": 999999}, | |

|

|

458 | "terms": { | |

|

|

459 | "field": "tags.server_name.values.keyword", | |

|

|

460 | "size": 999999, | |

|

|

461 | }, | |

|

|

455 | 462 | } |

|

|

456 | 463 | }, |

|

|

457 | 464 | "query": { |
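The filter in this hunk now lists two `range` clauses and an `exists` clause under `bool.filter`. Every clause in a `bool` filter is required, so the two ranges together select values in the half-open interval [1, 4). They could be collapsed into one `range` clause carrying both bounds.

The sketch below emulates that AND logic in pure Python and checks that the two forms agree. It does not call Elasticsearch, and the helper names are hypothetical:

```python
import operator

FIELD = "tags.main.numeric_values"
OPS = {"gte": operator.ge, "gt": operator.gt, "lte": operator.le, "lt": operator.lt}


def split_ranges(lower, upper):
    """Two separate range clauses, as written in the hunk."""
    return [
        {"range": {FIELD: {"gte": str(lower)}}},
        {"range": {FIELD: {"lt": str(upper)}}},
    ]


def merged_range(lower, upper):
    """Equivalent single range clause carrying both bounds."""
    return {"range": {FIELD: {"gte": str(lower), "lt": str(upper)}}}


def matches(value, clauses):
    """Emulate AND-ed range clauses on one numeric value."""
    for clause in clauses:
        for bounds in clause["range"].values():
            for op, bound in bounds.items():
                # Elasticsearch coerces the string bounds to numbers.
                if not OPS[op](value, float(bound)):
                    return False
    return True


for v in (0.5, 1, 2.5, 4):
    assert matches(v, split_ranges(1, 4)) == matches(v, [merged_range(1, 4)])
print([v for v in (0.5, 1, 2.5, 4) if matches(v, split_ranges(1, 4))])
```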

| @@ -517,18 +524,27 b' class RequestMetricService(BaseService):' | |||

|

|

517 | 524 | } |

|

|

518 | 525 | }, |

|

|

519 | 526 | "filter": { |

|

|

520 | "

|

|

|

521 |

|

|

|

|

522 | { | |

|

|

523 | "

|

|

|

524 | "

|

|

|

525 | } | |

|

|

526 | }, | |

|

|

527 |

|

|

|

|

527 | "bool": { | |

|

|

528 | "filter": [ | |

|

|

529 | { | |

|

|

530 | "terms": { | |

|

|

531 | "tags.type.values": [report_type] | |

|

|

532 | } | |

|

|

533 | }, | |

|

|

534 | { | |

|

|

535 | "exists": { | |

|

|

536 | "field": "tags.occurences.numeric_values" | |

|

|

537 | } | |

|

|

538 | }, | |

|

|

539 | ] | |

|

|

540 | } | |

|

|

528 | 541 | }, |

|

|

529 | 542 | } |

|

|

530 | 543 | }, |

|

|

531 | "terms": {"field": "tags.server_name.values", "size": 999999}, | |

|

|

544 | "terms": { | |

|

|

545 | "field": "tags.server_name.values.keyword", | |

|

|

546 | "size": 999999, | |

|

|

547 | }, | |

|

|

532 | 548 | } |

|

|

533 | 549 | }, |

|

|

534 | 550 | "query": { |

| @@ -50,7 +50,7 b' class SlowCallService(BaseService):' | |||

|

|

50 | 50 | "aggs": { |

|

|

51 | 51 | "sub_agg": { |

|

|

52 | 52 | "value_count": { |

|

|

53 | "field": "tags.statement_hash.values" | |

|

|

53 | "field": "tags.statement_hash.values.keyword" | |

|

|

54 | 54 | } |

|

|

55 | 55 | } |

|

|

56 | 56 | }, |

| @@ -60,7 +60,7 b' class SlowCallService(BaseService):' | |||

|

|

60 | 60 | }, |

|

|

61 | 61 | }, |

|

|

62 | 62 | "terms": { |

|

|

63 | "field": "tags.statement_hash.values", | |

|

|

63 | "field": "tags.statement_hash.values.keyword", | |

|

|

64 | 64 | "order": {"duration>sub_agg": "desc"}, |

|

|

65 | 65 | "size": 15, |

|

|

66 | 66 | }, |

| @@ -98,7 +98,10 b' class SlowCallService(BaseService):' | |||

|

|

98 | 98 | calls_query = { |

|

|

99 | 99 | "aggs": { |

|

|

100 | 100 | "top_calls": { |

|

|

101 | "terms": {"field": "tags.statement_hash.values", "size": 15}, | |

|

|

101 | "terms": { | |

|

|

102 | "field": "tags.statement_hash.values.keyword", | |

|

|

103 | "size": 15, | |

|

|

104 | }, | |

|

|

102 | 105 | "aggs": { |

|

|

103 | 106 | "top_calls_hits": { |

|

|

104 | 107 | "top_hits": {"sort": {"timestamp": "desc"}, "size": 5} |

| @@ -109,11 +112,7 b' class SlowCallService(BaseService):' | |||

|

|

109 | 112 | "query": { |

|

|

110 | 113 | "bool": { |

|

|

111 | 114 | "filter": [ |

|

|

112 | { | |

|

|

113 | "terms": { | |

|

|

114 | "resource_id": [filter_settings["resource"][0]] | |

|

|

115 | } | |

|

|

116 | }, | |

|

|

115 | {"terms": {"resource_id": [filter_settings["resource"][0]]}}, | |

|

|

117 | 116 | {"terms": {"tags.statement_hash.values": hashes}}, |

|

|

118 | 117 | { |

|

|

119 | 118 | "range": { |

| @@ -88,7 +88,7 b' class SlowCall(Base, BaseModel):' | |||

|

|

88 | 88 | doc = { |

|

|

89 | 89 | "resource_id": self.resource_id, |

|

|

90 | 90 | "timestamp": self.timestamp, |

|

|

91 | "

|

|

|

91 | "slow_call_id": str(self.id), | |

|

|

92 | 92 | "permanent": False, |

|

|

93 | 93 | "request_id": None, |

|

|

94 | 94 | "log_level": "UNKNOWN", |

| @@ -17,6 +17,7 b'' | |||

|

|

17 | 17 | import argparse |

|

|

18 | 18 | import datetime |

|

|

19 | 19 | import logging |

|

|

20 | import copy | |

|

|

20 | 21 | |

|

|

21 | 22 | import sqlalchemy as sa |

|

|

22 | 23 | import elasticsearch.exceptions |

| @@ -34,7 +35,6 b' from appenlight.models.log import Log' | |||

|

|

34 | 35 | from appenlight.models.slow_call import SlowCall |

|

|

35 | 36 | from appenlight.models.metric import Metric |

|

|

36 | 37 | |

|

|

37 | ||

|

|

38 | 38 | log = logging.getLogger(__name__) |

|

|

39 | 39 | |

|

|

40 | 40 | tables = { |

| @@ -128,7 +128,20 b' def main():' | |||

|

|

128 | 128 | |

|

|

129 | 129 | def update_template(): |

|

|

130 | 130 | try: |

|

|

131 | Datastores.es.indices.delete_template("rcae") | |

|

|

131 | Datastores.es.indices.delete_template("rcae_reports") | |

|

|

132 | except elasticsearch.exceptions.NotFoundError as e: | |

|

|

133 | log.error(e) | |

|

|

134 | ||

|

|

135 | try: | |

|

|

136 | Datastores.es.indices.delete_template("rcae_logs") | |

|

|

137 | except elasticsearch.exceptions.NotFoundError as e: | |

|

|

138 | log.error(e) | |

|

|

139 | try: | |

|

|

140 | Datastores.es.indices.delete_template("rcae_slow_calls") | |

|

|

141 | except elasticsearch.exceptions.NotFoundError as e: | |

|

|

142 | log.error(e) | |

|

|

143 | try: | |

|

|

144 | Datastores.es.indices.delete_template("rcae_metrics") | |

|

|

132 | 145 | except elasticsearch.exceptions.NotFoundError as e: |

|

|

133 | 146 | log.error(e) |

|

|

134 | 147 | log.info("updating elasticsearch template") |
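The four `try`/`except` blocks differ only in the template name, so a loop can express the same behavior. The real code calls `Datastores.es.indices.delete_template` and catches `elasticsearch.exceptions.NotFoundError`. So that the sketch runs without a cluster, it uses stand-ins for both: a hypothetical `FakeIndices` client and a local `NotFoundError` class:

```python
import logging

log = logging.getLogger(__name__)


class NotFoundError(Exception):
    """Stand-in for elasticsearch.exceptions.NotFoundError."""


class FakeIndices:
    """Stand-in for Datastores.es.indices that only tracks template names."""

    def __init__(self, templates):
        self.templates = set(templates)

    def delete_template(self, name):
        if name not in self.templates:
            raise NotFoundError(name)
        self.templates.remove(name)


TEMPLATE_NAMES = ("rcae_reports", "rcae_logs", "rcae_slow_calls", "rcae_metrics")


def delete_templates(indices, names=TEMPLATE_NAMES):
    """Delete each template; log (rather than raise) when one is missing."""
    missing = []
    for name in names:
        try:
            indices.delete_template(name)
        except NotFoundError as e:
            log.error(e)
            missing.append(name)
    return missing


indices = FakeIndices(["rcae_reports", "rcae_metrics"])
print(delete_templates(indices))
```

Adding a template then means adding one name to the tuple rather than another four-line block.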

| @@ -139,7 +152,13 b' def update_template():' | |||

|

|

139 | 152 | "mapping": { |

|

|

140 | 153 | "type": "object", |

|

|

141 | 154 | "properties": { |

|

|

142 | "values": {

|

|

|

155 | "values": { | |

|

|

156 | "type": "text", | |

|

|

157 | "analyzer": "tag_value", | |

|

|

158 | "fields": { | |

|

|

159 | "keyword": {"type": "keyword", "ignore_above": 256} | |

|

|

160 | }, | |

|

|

161 | }, | |

|

|

143 | 162 | "numeric_values": {"type": "float"}, |

|

|

144 | 163 | }, |

|

|

145 | 164 | }, |
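Tag `values` are now mapped as two fields:

- `text`, analyzed with `tag_value`.
- A `keyword` sub-field with `ignore_above: 256`.

Strings longer than 256 characters remain searchable through the `text` field but are not indexed in `.keyword`. As a result, they drop out of the terms aggregations that now target `*.values.keyword`.

The sketch below emulates that rule; `keyword_terms` is a hypothetical name:

```python
IGNORE_ABOVE = 256  # value from the keyword sub-field mapping in the hunk


def keyword_terms(values, ignore_above=IGNORE_ABOVE):
    """Return the values that would be indexed in the .keyword sub-field.

    Each array element is checked on its own; longer strings are skipped.
    """
    return [v for v in values if len(v) <= ignore_above]


values = ["checkout", "x" * 256, "y" * 257]
kept = keyword_terms(values)
print([len(v) for v in kept])
```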

| @@ -147,40 +166,69 b' def update_template():' | |||

|

|

147 | 166 | } |

|

|

148 | 167 | ] |

|

|

149 | 168 | |

|

|

150 | template_schema = { | |

|

|

151 | "template": "rcae_*", | |

|

|

169 | shared_analysis = { | |

|

|

170 | "analyzer": { | |

|

|

171 | "url_path": { | |

|

|

172 | "type": "custom", | |

|

|

173 | "char_filter": [], | |

|

|

174 | "tokenizer": "path_hierarchy", | |

|

|

175 | "filter": [], | |

|

|

176 | }, | |

|

|

177 | "tag_value": { | |

|

|

178 | "type": "custom", | |

|

|

179 | "char_filter": [], | |

|

|

180 | "tokenizer": "keyword", | |

|

|

181 | "filter": ["lowercase"], | |

|

|

182 | }, | |

|

|

183 | } | |

|

|

184 | } | |

|

|

185 | ||

|

|

186 | shared_log_mapping = { | |

|

|

187 | "_all": {"enabled": False}, | |

|

|

188 | "dynamic_templates": tag_templates, | |

|

|

189 | "properties": { | |

|

|

190 | "pg_id": {"type": "keyword", "index": True}, | |

|

|

191 | "delete_hash": {"type": "keyword", "index": True}, | |

|

|

192 | "resource_id": {"type": "integer"}, | |

|

|

193 | "timestamp": {"type": "date"}, | |

|

|

194 | "permanent": {"type": "boolean"}, | |

|

|

195 | "request_id": {"type": "keyword", "index": True}, | |

|

|

196 | "log_level": {"type": "text", "analyzer": "simple"}, | |

|

|

197 | "message": {"type": "text", "analyzer": "simple"}, | |

|

|

198 | "namespace": { | |

|

|

199 | "type": "text", | |

|

|

200 | "fields": {"keyword": {"type": "keyword", "ignore_above": 256}}, | |

|

|

201 | }, | |

|

|

202 | "tags": {"type": "object"}, | |

|

|

203 | "tag_list": { | |

|

|

204 | "type": "text", | |

|

|

205 | "analyzer": "tag_value", | |

|

|

206 | "fields": {"keyword": {"type": "keyword", "ignore_above": 256}}, | |

|

|

207 | }, | |

|

|

208 | }, | |

|

|

209 | } | |

|

|

210 | ||

|

|

211 | report_schema = { | |

|

|

212 | "template": "rcae_r_*", | |

|

|

152 | 213 | "settings": { |

|

|

153 | 214 | "index": { |

|

|

154 | 215 | "refresh_interval": "5s", |

|

|

155 | 216 | "translog": {"sync_interval": "5s", "durability": "async"}, |

|

|

156 | 217 | }, |

|

|

157 | 218 | "number_of_shards": 5, |

|

|

158 | "analysis":

|

|

|

159 | "analyzer": { | |

|

|

160 | "url_path": { | |

|

|

161 | "type": "custom", | |

|

|

162 | "char_filter": [], | |

|

|

163 | "tokenizer": "path_hierarchy", | |

|

|

164 | "filter": [], | |

|

|

165 | }, | |

|

|

166 | "tag_value": { | |

|

|

167 | "type": "custom", | |

|

|

168 | "char_filter": [], | |

|

|

169 | "tokenizer": "keyword", | |

|

|

170 | "filter": ["lowercase"], | |

|

|

171 | }, | |

|

|

172 | } | |

|

|

173 | }, | |

|

|

219 | "analysis": shared_analysis, | |

|

|

174 | 220 | }, |

|

|

175 | 221 | "mappings": { |

|

|

176 | "report

|

|

|

222 | "report": { | |

|

|

177 | 223 | "_all": {"enabled": False}, |

|

|

178 | 224 | "dynamic_templates": tag_templates, |

|

|

179 | 225 | "properties": { |

|

|

180 | "

|

|

|

226 | "type": {"type": "keyword", "index": True}, | |

|

|

227 | # report group | |

|

|

228 | "group_id": {"type": "keyword", "index": True}, | |

|

|

181 | 229 | "resource_id": {"type": "integer"}, |

|

|

182 | 230 | "priority": {"type": "integer"}, |

|

|

183 | "error": {"type": "

|

|

|

231 | "error": {"type": "text", "analyzer": "simple"}, | |

|

|

184 | 232 | "read": {"type": "boolean"}, |

|

|

185 | 233 | "occurences": {"type": "integer"}, |

|

|

186 | 234 | "fixed": {"type": "boolean"}, |
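`shared_analysis` now defines the two custom analyzers once for every template:

- `tag_value` keeps the whole value as a single lowercase token (keyword tokenizer plus lowercase filter).
- `url_path` uses the `path_hierarchy` tokenizer, so a path matches each of its prefixes.

The sketch below approximates in pure Python the tokens each analyzer produces, which helps when reasoning about queries. It ignores `path_hierarchy` options such as `delimiter` overrides, `replacement`, `skip`, and `reverse`:

```python
def tag_value_tokens(text):
    """keyword tokenizer + lowercase filter: one token, lowercased."""
    return [text.lower()]


def url_path_tokens(path, delimiter="/"):
    """Approximate the default path_hierarchy tokenizer: every path prefix."""
    tokens = []
    for pos, char in enumerate(path):
        # A delimiter at position 0 would yield an empty prefix, so skip it.
        if char == delimiter and pos > 0:
            tokens.append(path[:pos])
    tokens.append(path)
    return tokens


print(tag_value_tokens("Production-EU"))
print(url_path_tokens("/api/v1/reports"))
```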

| @@ -189,58 +237,132 b' def update_template():' | |||

|

|

189 | 237 | "average_duration": {"type": "float"}, |

|

|

190 | 238 | "summed_duration": {"type": "float"}, |

|

|

191 | 239 | "public": {"type": "boolean"}, |

|

|

192 |

|

|

|

|

193 | }, | |

|

|

194 | "report": { | |

|

|

195 | "_all": {"enabled": False}, | |

|

|

196 | "dynamic_templates": tag_templates, | |

|

|

197 | "properties": { | |

|

|

198 | "pg_id": {"type": "string", "index": "not_analyzed"}, | |

|

|

199 | "resource_id": {"type": "integer"}, | |

|

|

200 | "group_id": {"type": "string"}, | |

|

|

240 | # report | |

|

|

241 | "report_id": {"type": "keyword", "index": True}, | |

|

|

201 | 242 | "http_status": {"type": "integer"}, |

|

|

202 | "ip": {"type": "

|

|

|

203 | "url_domain": {"type": "

|

|

|

204 | "url_path": {"type": "

|

|

|

205 | "error": {"type": "string", "analyzer": "simple"}, | |

|

|

243 | "ip": {"type": "keyword", "index": True}, | |

|

|

244 | "url_domain": {"type": "text", "analyzer": "simple"}, | |

|

|

245 | "url_path": {"type": "text", "analyzer": "url_path"}, | |

|

|

206 | 246 | "report_type": {"type": "integer"}, |

|

|

207 | 247 | "start_time": {"type": "date"}, |

|

|

208 | "request_id": {"type": "

|

|

|

248 | "request_id": {"type": "keyword", "index": True}, | |

|

|

209 | 249 | "end_time": {"type": "date"}, |

|

|

210 | 250 | "duration": {"type": "float"}, |

|

|

211 | 251 | "tags": {"type": "object"}, |

|

|

212 | "tag_list": {

|

|

|

252 | "tag_list": { | |

|

|

253 | "type": "text", | |

|

|

254 | "analyzer": "tag_value", | |

|

|

255 | "fields": {"keyword": {"type": "keyword", "ignore_above": 256}}, | |

|

|

256 | }, | |

|

|

213 | 257 | "extra": {"type": "object"}, |

|

|

214 |

|

|

|

|

215 | "

|

|

|

216 | }, | |

|

|

217 | "log": { | |

|

|

218 | "_all": {"enabled": False}, | |

|

|

219 | "dynamic_templates": tag_templates, | |

|

|

220 | "properties": { | |

|

|

221 | "pg_id": {"type": "string", "index": "not_analyzed"}, | |

|

|

222 | "delete_hash": {"type": "string", "index": "not_analyzed"}, | |

|

|

223 | "resource_id": {"type": "integer"}, | |

|

|

258 | # report stats | |

|

|

259 | "report_stat_id": {"type": "keyword", "index": True}, | |

|

|

224 | 260 | "timestamp": {"type": "date"}, |

|

|

225 | 261 | "permanent": {"type": "boolean"}, |

|

|

226 | "

|

|

|

227 | "

|

|

|

228 | "message": {"type": "string", "analyzer": "simple"}, | |

|

|

229 | "namespace": {"type": "string", "index": "not_analyzed"}, | |

|

|

230 | "

|

|

|

231 | "tag_list": {"type": "string", "analyzer": "tag_value"}, | |

|

|

262 | "log_level": {"type": "text", "analyzer": "simple"}, | |

|

|

263 | "message": {"type": "text", "analyzer": "simple"}, | |

|

|

264 | "namespace": { | |

|

|

265 | "type": "text", | |

|

|

266 | "fields": {"keyword": {"type": "keyword", "ignore_above": 256}}, | |

|

|

267 | }, | |

|

|

268 | "join_field": { | |

|

|

269 | "type": "join", | |

|

|

270 | "relations": {"report_group": ["report", "report_stat"]}, | |

|

|

271 | }, | |

|

|

232 | 272 | }, |

|

|

273 | } | |

|

|

274 | }, | |

|

|

275 | } | |

|

|

276 | ||

|

|

277 | Datastores.es.indices.put_template("rcae_reports", body=report_schema) | |

|

|

278 | ||

|

|

279 | logs_mapping = copy.deepcopy(shared_log_mapping) | |

|

|

280 | logs_mapping["properties"]["log_id"] = logs_mapping["properties"]["pg_id"] | |

|

|

281 | del logs_mapping["properties"]["pg_id"] | |

|

|

282 | ||

|

|

283 | log_template = { | |

|

|

284 | "template": "rcae_l_*", | |

|

|

285 | "settings": { | |

|

|

286 | "index": { | |

|

|

287 | "refresh_interval": "5s", | |

|

|

288 | "translog": {"sync_interval": "5s", "durability": "async"}, | |

|

|

233 | 289 | }, |

|

|

290 | "number_of_shards": 5, | |

|

|

291 | "analysis": shared_analysis, | |

|

|

234 | 292 | }, |

|

|

293 | "mappings": {"log": logs_mapping}, | |

|

|

235 | 294 | } |

|

|

236 | 295 | |

|

|

237 | Datastores.es.indices.put_template("rcae", body=template

|

|

|

296 | Datastores.es.indices.put_template("rcae_logs", body=log_template) | |

|

|

297 | ||

|

|

298 | slow_call_mapping = copy.deepcopy(shared_log_mapping) | |

|

|

299 | slow_call_mapping["properties"]["slow_call_id"] = slow_call_mapping["properties"][ | |

|

|

300 | "pg_id" | |

|

|

301 | ] | |

|

|

302 | del slow_call_mapping["properties"]["pg_id"] | |

|

|

303 | ||

|

|

304 | slow_call_template = { | |

|

|

305 | "template": "rcae_sc_*", | |

|

|

306 | "settings": { | |

|

|

307 | "index": { | |

|

|

308 | "refresh_interval": "5s", | |

|

|

309 | "translog": {"sync_interval": "5s", "durability": "async"}, | |

|

|

310 | }, | |

|

|

311 | "number_of_shards": 5, | |

|

|

312 | "analysis": shared_analysis, | |

|

|

313 | }, | |

|

|

314 | "mappings": {"log": slow_call_mapping}, | |

|

|

315 | } | |

|

|

316 | ||

|

|

317 | Datastores.es.indices.put_template("rcae_slow_calls", body=slow_call_template) | |

|

|

318 | ||

|

|

319 | metric_mapping = copy.deepcopy(shared_log_mapping) | |

|

|

320 | metric_mapping["properties"]["metric_id"] = metric_mapping["properties"]["pg_id"] | |

|

|

321 | del metric_mapping["properties"]["pg_id"] | |

|

|

322 | ||

|

|

323 | metrics_template = { | |

|

|

324 | "template": "rcae_m_*", | |

|

|

325 | "settings": { | |

|

|

326 | "index": { | |

|

|

327 | "refresh_interval": "5s", | |

|

|

328 | "translog": {"sync_interval": "5s", "durability": "async"}, | |

|

|

329 | }, | |

|

|

330 | "number_of_shards": 5, | |

|

|

331 | "analysis": shared_analysis, | |

|

|

332 | }, | |

|

|

333 | "mappings": {"log": metric_mapping}, | |

|

|

334 | } | |

|

|

335 | ||

|

|

336 | Datastores.es.indices.put_template("rcae_metrics", body=metrics_template) | |

|

|

337 | ||

|

|

338 | uptime_metric_mapping = copy.deepcopy(shared_log_mapping) | |

|

|

339 | uptime_metric_mapping["properties"]["uptime_id"] = uptime_metric_mapping[ | |

|

|

340 | "properties" | |

|

|

341 | ]["pg_id"] | |

|

|

342 | del uptime_metric_mapping["properties"]["pg_id"] | |

|

|

343 | ||

|

|

344 | uptime_metrics_template = { | |

|

|

345 | "template": "rcae_uptime_ce_*", | |

|

|

346 | "settings": { | |

|

|

347 | "index": { | |

|

|

348 | "refresh_interval": "5s", | |

|

|

349 | "translog": {"sync_interval": "5s", "durability": "async"}, | |

|

|

350 | }, | |

|

|

351 | "number_of_shards": 5, | |

|

|

352 | "analysis": shared_analysis, | |

|

|

353 | }, | |

|

|

354 | "mappings": {"log": shared_log_mapping}, | |

|

|

355 | } | |

|

|

356 | ||

|

|

357 | Datastores.es.indices.put_template( | |

|

|

358 | "rcae_uptime_metrics", body=uptime_metrics_template | |

|

|

359 | ) | |

|

|

238 | 360 | |

|

|

239 | 361 | |

|

|

240 | 362 | def reindex_reports(): |

|

|

241 | 363 | reports_groups_tables = detect_tables("reports_groups_p_") |

|

|

242 | 364 | try: |

|

|

243 | Datastores.es.indices.delete("rcae_r*") | |

|

|

365 | Datastores.es.indices.delete("rcae_r_*") | |

|

|

244 | 366 | except elasticsearch.exceptions.NotFoundError as e: |

|

|

245 | 367 | log.error(e) |

|

|

246 | 368 | |
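Each per-type template starts from `copy.deepcopy(shared_log_mapping)` and renames `pg_id` to a type-specific id field. The deep copy matters. A shallow `dict.copy()` would share the nested `properties` dict, so deleting `pg_id` for logs would also strip it from `shared_log_mapping`, which the uptime template later uses directly.

The sketch below wraps the pattern in a helper. `derive_mapping` is hypothetical, and the mapping is trimmed:

```python
import copy

# Trimmed stand-in for shared_log_mapping from the hunk.
SHARED_LOG_MAPPING = {
    "_all": {"enabled": False},
    "properties": {
        "pg_id": {"type": "keyword", "index": True},
        "resource_id": {"type": "integer"},
        "timestamp": {"type": "date"},
    },
}


def derive_mapping(shared, id_field):
    """Deep-copy the shared mapping and rename its pg_id property to id_field."""
    mapping = copy.deepcopy(shared)
    props = mapping["properties"]
    props[id_field] = props.pop("pg_id")  # copy-and-delete in one step
    return mapping


logs_mapping = derive_mapping(SHARED_LOG_MAPPING, "log_id")
metric_mapping = derive_mapping(SHARED_LOG_MAPPING, "metric_id")

print(sorted(logs_mapping["properties"]))
print("pg_id" in SHARED_LOG_MAPPING["properties"])
```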

| @@ -264,7 +386,7 b' def reindex_reports():' | |||

|

|

264 | 386 | name = partition_table.name |

|

|

265 | 387 | log.info("round {}, {}".format(i, name)) |

|

|

266 | 388 | for k, v in es_docs.items(): |

|

|

267 | to_update = {"_index": k, "_type": "report

|

|

|

389 | to_update = {"_index": k, "_type": "report"} | |

|

|

268 | 390 | [i.update(to_update) for i in v] |

|

|

269 | 391 | elasticsearch.helpers.bulk(Datastores.es, v) |

|

|

270 | 392 | |
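Indices created in Elasticsearch 6 hold a single mapping type. That is why every reindexed document is written with `_type: "report"`, while the `join_field` relation links the documents: `report_group` is the parent of `report` and `report_stat`.

With a join field, each child document names its parent and must be routed to the parent's shard, usually by passing the parent id as routing. The sketch below builds bulk actions in the action-dict form accepted by `elasticsearch.helpers.bulk`. Several details are assumptions, because the document-building code is not part of this diff:

- the helper names;
- the ids and the index name `rcae_r_2019_01`;
- the way `join_field` is filled in.

```python
def group_action(index, group_id, source):
    """Bulk action for a parent report_group document (hypothetical helper)."""
    doc = dict(source, join_field={"name": "report_group"})
    return {"_index": index, "_type": "report", "_id": group_id, "_source": doc}


def child_action(index, kind, doc_id, group_id, source):
    """Bulk action for a report or report_stat child of group_id."""
    doc = dict(source, join_field={"name": kind, "parent": group_id})
    return {
        "_index": index,
        "_type": "report",
        "_id": doc_id,
        "_routing": group_id,  # children must live on the parent's shard
        "_source": doc,
    }


actions = [
    group_action("rcae_r_2019_01", "g1", {"group_id": "g1", "resource_id": 1}),
    child_action("rcae_r_2019_01", "report", "r1", "g1", {"report_id": "r1"}),
]
print(actions[1]["_routing"], actions[1]["_source"]["join_field"])
```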

| @@ -322,7 +444,7 b' def reindex_reports():' | |||

|

|

322 | 444 | name = partition_table.name |

|

|

323 | 445 | log.info("round {}, {}".format(i, name)) |

|

|

324 | 446 | for k, v in es_docs.items(): |

|

|

325 | to_update = {"_index": k, "_type": "

|

|

|

447 | to_update = {"_index": k, "_type": "report"} | |

|

|

326 | 448 | [i.update(to_update) for i in v] |

|

|

327 | 449 | elasticsearch.helpers.bulk(Datastores.es, v) |

|

|

328 | 450 | |
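Both reindex loops stamp `_index` and `_type` onto every document before handing the list to `elasticsearch.helpers.bulk`. The list comprehension there runs only for its side effect; a plain loop says the same thing more directly.

The sketch below shows that preparation step on plain dicts, without the bulk call. `prepare_actions` is a hypothetical name, and the index names are illustrative:

```python
def prepare_actions(es_docs, doc_type="report"):
    """Flatten {index_name: [doc, ...]} into bulk actions tagged with index/type.

    Docs are updated in place, as in the original loop.
    """
    actions = []
    for index_name, docs in es_docs.items():
        for doc in docs:
            doc.update({"_index": index_name, "_type": doc_type})
            actions.append(doc)
    return actions


es_docs = {
    "rcae_r_2019_01": [{"_id": "1", "_source": {"report_id": "1"}}],
    "rcae_r_2019_02": [{"_id": "2", "_source": {"report_id": "2"}}],
}
actions = prepare_actions(es_docs)
print([(a["_index"], a["_type"]) for a in actions])
```

The original issues one bulk call per index. Because each action carries its own `_index`, a single call over the flattened list would also work.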

| @@ -331,7 +453,7 b' def reindex_reports():' | |||

|

|

331 | 453 | |

|

|

332 | 454 | def reindex_logs(): |

|

|

333 | 455 | try: |

|

|

334 | Datastores.es.indices.delete("rcae_l*") | |

|

|

456 | Datastores.es.indices.delete("rcae_l_*") | |

|

|

335 | 457 | except elasticsearch.exceptions.NotFoundError as e: |

|

|

336 | 458 | log.error(e) |

|

|

337 | 459 | |

| @@ -367,7 +489,7 b' def reindex_logs():' | |||

|

|

367 | 489 | |

|

|

368 | 490 | def reindex_metrics(): |

|

|

369 | 491 | try: |

|

|

370 | Datastores.es.indices.delete("rcae_m*") | |

|

|

492 | Datastores.es.indices.delete("rcae_m_*") | |

|

|

371 | 493 | except elasticsearch.exceptions.NotFoundError as e: |

|

|

372 | 494 | log.error(e) |

|

|

373 | 495 | |

| @@ -401,7 +523,7 b' def reindex_metrics():' | |||

|

|

401 | 523 | |

|

|

402 | 524 | def reindex_slow_calls(): |

|

|

403 | 525 | try: |

|

|

404 | Datastores.es.indices.delete("rcae_sc*") | |

|

|

526 | Datastores.es.indices.delete("rcae_sc_*") | |

|

|

405 | 527 | except elasticsearch.exceptions.NotFoundError as e: |

|

|

406 | 528 | log.error(e) |

|

|

407 | 529 | |
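The reindex helpers now delete `rcae_l_*`, `rcae_m_*`, and `rcae_sc_*` instead of the looser `rcae_l*`-style patterns, so each pattern lines up with the `template` pattern of its index template. For simple `*` wildcards, Python's `fnmatch.fnmatchcase` is a reasonable stand-in for seeing which index names a pattern catches. The index names below are hypothetical:

```python
from fnmatch import fnmatchcase

# Hypothetical index names; only the prefixes come from the templates.
INDICES = [
    "rcae_r_2019_01",
    "rcae_l_2019_01",
    "rcae_m_2019_01",
    "rcae_sc_2019_01",
    "rcae_reports_archive",
    "rcae_logs_archive",
]


def matching(pattern, names=INDICES):
    """Return the names a single-wildcard index pattern would select."""
    return [name for name in names if fnmatchcase(name, pattern)]


print(matching("rcae_l*"))
print(matching("rcae_l_*"))
```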

|

|

1 | NO CONTENT: modified file (file too big; content truncated) | |

1 | NO CONTENT: modified file (file too big; content truncated) | |

1 | NO CONTENT: modified file (file too big; content truncated) | |

1 | NO CONTENT: modified file (file too big; content truncated) | |

1 | NO CONTENT: modified file (file too big; content truncated) | |

1 | NO CONTENT: modified file (file too big; content truncated) | |

1 | NO CONTENT: modified file (file too big; content truncated) | |

1 | NO CONTENT: modified file (file too big; content truncated) | |

1 | NO CONTENT: modified file (file too big; content truncated) | |

1 | NO CONTENT: file was removed |

|